During the 1970s, the first global ocean models emerged at research centers across the U.S. Their construction was basic by modern standards, but, like today’s modelers, the researchers creating them aimed to simulate the world’s oceans by coding the mathematical equations of fluid motion on a sphere. Those efforts made use of the most sophisticated computing power available at the time, but realistic simulations of the ocean were years away.

Today, things have moved along.

Ocean modelers are much closer to simulating accurate representations of the real ocean, and over the past few decades their models have become incredibly realistic, with applications ranging from weather and wave forecasting, to climate and palaeoclimate research, and not least, the search for missing aircraft.

At the GEOMAR Helmholtz Centre for Ocean Research Kiel, Germany, Dr. Jonathan Durgadoo has been working with ocean models for almost 10 years. In that time he has witnessed a trend toward increasing realism in the models that he uses.

“By realistic we mean the ability for models to simulate processes in the ocean that are observed and known,” he says. “As computers get faster, more oceanic processes that occur at different scales can be included. And as we understand more and more about ocean processes, we can begin to think of ways to include them in our models.”

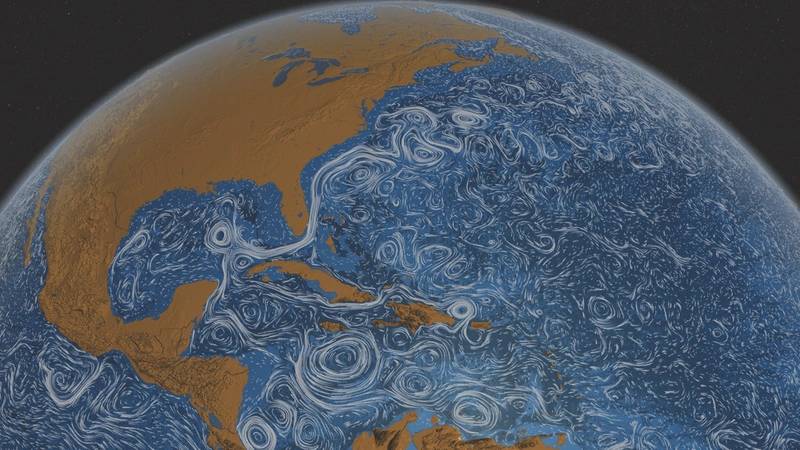

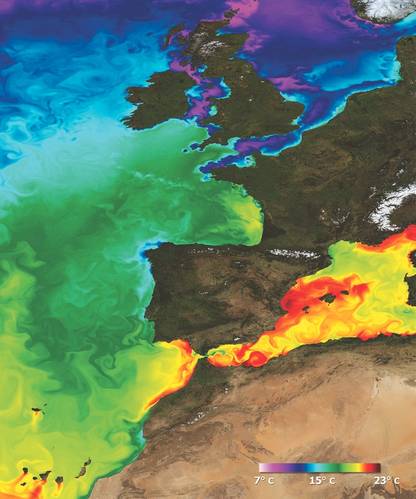

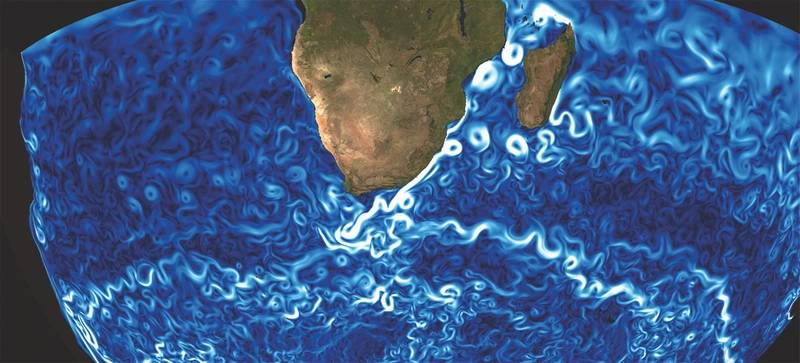

In particular, ocean models have become more realistic in recent years because of their ability to resolve eddies. Eddies are mesoscale swirling features caused by turbulence in the ocean. Over the past decade or so, as computational power and data storage have increased exponentially, eddy-resolving ocean models have become more widespread.

Durgadoo explains that in ocean modeling, size matters. “Oceanographers generally speak of scales in space and time,” he says. “Spatially, processes in the ocean occur at scales ranging from millimeters to thousands of kilometers, and temporally up to several centuries.”

The word mesoscale refers to structures on the order of tens to hundreds of miles. These structures, which include eddies, serve many different functions in the ocean. For example, eddies capture water masses at one location and transport them to another, and can also trap nutrient-rich water, which locally promotes biological activity. So, in order for ocean models to achieve realism at these scales, eddies and other structures need to be represented.

“This is not to say that models that do not simulate these structures are useless,” adds Durgadoo. “One must understand and appreciate the usefulness of models within their limits.”

The Problem with Model Resolution

Modeling the global ocean is inherently difficult. Throughout the history of ocean model development, from the earliest models running on hardware that was very basic by modern standards through to today’s state-of-the-art ‘million-lines-of-code’ behemoths, researchers have struggled with issues of resolution, i.e. the geographical scale at which a model runs. The finer the grid spacing, the better the representation of the ocean.
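To get a feel for why resolution is such a persistent struggle, here is a rough back-of-the-envelope sketch. It assumes a global ocean surface area of about 361 million square kilometers, ignores vertical levels, and assumes that the model time step has to shrink in proportion to the grid spacing. The function names are illustrative and do not come from any real ocean model.

```python
# Rough estimate of how grid size and cost grow as grid spacing shrinks.
# Assumptions (not from the article): ocean area of ~3.61e8 km^2, a single
# horizontal layer, and a time step proportional to the grid spacing.

OCEAN_AREA_KM2 = 3.61e8  # approximate global ocean surface area


def horizontal_cells(spacing_km: float) -> float:
    """Approximate number of square grid cells needed to tile the ocean surface."""
    return OCEAN_AREA_KM2 / spacing_km**2


def relative_cost(spacing_km: float, reference_km: float = 100.0) -> float:
    """Cost relative to a reference grid: ratio^2 more cells times ratio more time steps."""
    ratio = reference_km / spacing_km
    return ratio**3


for spacing in (100.0, 25.0, 10.0, 1.0):
    cells = horizontal_cells(spacing)
    cost = relative_cost(spacing)
    print(f"{spacing:6.1f} km  {cells:14,.0f} cells  {cost:12,.0f}x cost")
```

Under these assumptions, halving the grid spacing means four times as many cells and twice as many time steps, so roughly eight times the work. Real models add vertical levels and data output on top of that.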

According to Professor Sergey Danilov, who works on ocean model development at the Climate Dynamics Division of the Alfred Wegener Institute, Bremerhaven, Germany, the main challenge has always been to make the models reproduce the water mass characteristics and circulation that we observe in the real ocean.

“Motions at small spatial and temporal scales cannot be modeled and are therefore parameterized,” he says. “This creates errors, which can accumulate over time. So, modelers try to reduce these by increasing resolution, improving the fidelity of parameterizations, or improving numerical algorithms.”

This sentiment is echoed in former MIT oceanographer Carl Wunsch’s book Modern Observational Physical Oceanography, where the author explains that no model has perfect resolution. This means that some processes are always omitted – an obstacle that nature does not face. “The user must determine whether the omission of those processes is important,” writes Wunsch. “Even were it possible to perfectly numerically represent the assumed equations, errors always exist in computer codes.”

The Search for MH370

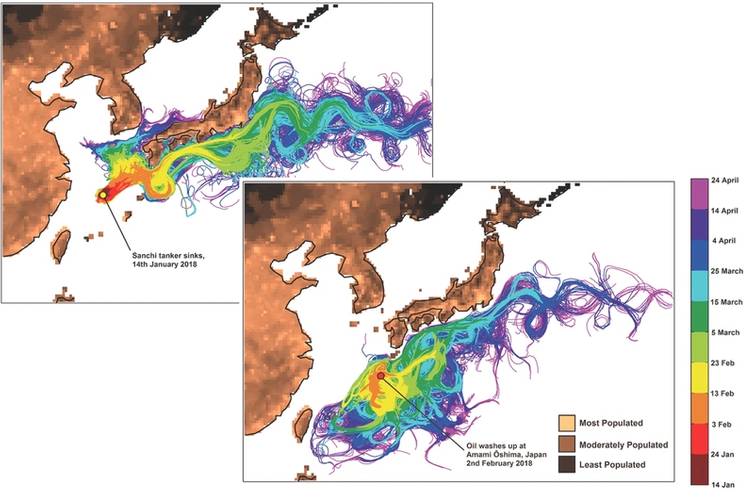

Nevertheless, scientists who specialize in ocean model development have made massive strides in their quest for perfection. When a flaperon (part of a plane wing) from missing Malaysia Airlines Flight MH370 turned up on La Réunion Island in the Indian Ocean in July 2015, Dr. Durgadoo and his colleagues had a brilliant idea. They reasoned that, with their state-of-the-art ocean model, it should be possible to help find out where the plane had crashed.

“The mere fact that debris belonging to MH370 had been found on beaches of the Indian Ocean suggested that they floated for months on the ocean surface,” he says. “In theory, given the right information, trajectories could be simulated in the hope to locate the flaperon’s possible start position, and hence shed some light on the location of the demised aircraft.”

And that’s exactly what they did. By backtracking the debris through their model with a method called Lagrangian analysis, the researchers were able to estimate where the flaperon had most likely started its journey. Durgadoo described the process in a 2016 article. “The idea was that we could use an ocean model to track the flaperon back in time to establish the flight’s crash location. But the ocean is a chaotic place; it makes no sense to simulate the path of a single ‘virtual flaperon’ backward in time. Therefore a ‘strength in numbers’ strategy is what we used when we placed close to five million virtual model flaperons around La Réunion Island during the model month of July 2015.”
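The ‘strength in numbers’ idea can be illustrated with a toy sketch. This is not the GEOMAR team’s code or model: the velocity field below is a made-up steady flow, the diffusivity and search box are hypothetical values, and the time stepping is a simple backward Euler-style update with a random walk standing in for unresolved turbulence.

```python
import numpy as np


def velocity(x, y):
    """Hypothetical steady surface flow in km/day: westward drift plus weak rotation."""
    u = -10.0 - 0.01 * y
    v = 0.01 * x
    return u, v


def backtrack(x0, y0, n_days, dt=1.0, diffusivity=50.0, seed=0):
    """Step virtual particles backward in time from where they were found.

    diffusivity (km^2/day) is an assumed value that sets the size of the
    random-walk kick added at every step.
    """
    rng = np.random.default_rng(seed)
    x = np.array(x0, dtype=float)
    y = np.array(y0, dtype=float)
    sigma = np.sqrt(2.0 * diffusivity * dt)  # random-walk step length in km
    for _ in range(int(n_days / dt)):
        u, v = velocity(x, y)
        # Going backward in time means moving against the current.
        x = x - u * dt + sigma * rng.standard_normal(x.shape)
        y = y - v * dt + sigma * rng.standard_normal(y.shape)
    return x, y


def fraction_in_box(x, y, box):
    """Share of particles whose position lies inside (xmin, xmax, ymin, ymax)."""
    xmin, xmax, ymin, ymax = box
    inside = (x >= xmin) & (x <= xmax) & (y >= ymin) & (y <= ymax)
    return inside.mean()


# Release a cloud of virtual particles around the (hypothetical) find location.
rng = np.random.default_rng(1)
n_particles = 50_000
x_start = rng.normal(0.0, 5.0, n_particles)
y_start = rng.normal(0.0, 5.0, n_particles)

x_origin, y_origin = backtrack(x_start, y_start, n_days=100)

search_box = (900.0, 1100.0, -100.0, 100.0)  # hypothetical search area in km
share = fraction_in_box(x_origin, y_origin, search_box)
print(f"Share of virtual particles originating in the box: {share:.3f}")
```

No single backtracked particle means much on its own. The useful quantity is the share of the whole cloud that ends up in a given region, which is the kind of probability statement the researchers report.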

And their results were remarkable. According to Durgadoo, “while it is impossible to pinpoint an exact location, we found that the origin of the flaperon is likely to be to the west rather than southwest of Australia. More importantly, based on our analysis, the chance that the flaperon started its journey from the priority search area is less than 1.3 percent.”

The team had used their model to conclude that search efforts along the priority zone were highly unlikely to achieve success in finding the aircraft. Indeed, with the plane still missing today, the fate of flight MH370 remains a mystery.

[Editor’s note: Since the author’s writing, the search for MH370 has resumed]

Technology Drives Model Advances

For ocean models to reach this level of sophistication, the technology driving their development has had to be wide-ranging: from the observational instruments deployed at sea to acquire accurate data, to the state-of-the-art supercomputers used to make future predictions.

“Developments on the computer hardware side allow one to use more resources,” says Professor Danilov, “meaning we can explicitly resolve processes that were previously parameterized. There is hope that new computational technologies involving GPUs – Graphics Processing Units – will lead to an increase of model throughput.”

“On the physical side,” he adds, “new data are becoming available through modern technology, helping to better tune or constrain parameterizations used in the models. Satellite altimetry and Argo floats are of particular importance.”

But Danilov points out that progress in computational power is the main driver at present. Running global models at a high resolution – around one kilometer grid size – is already possible, meaning processes down to that level are being resolved.

“Models that resolve mesoscale motions will become a reality in the foreseeable future,” he says. “But such model runs are still too computationally expensive, meaning they take a lot of time to run and generate a lot of data. So, the distinction should be made between what is possible in principle, and what can be used as a research tool.”

In fact, he believes that the future of ocean modeling may follow a similar pathway to that of weather prediction, where ensembles of model runs are performed to get a feeling for multiple potential future states of the ocean – not just one.

“The problem is,” he says, “that even with perfect initial data, there is a horizon of predictability, because after a certain time, prediction becomes more difficult. The ocean has intricate internal dynamics – which are chaotic – and so a numerically simulated ocean will diverge from observations over time.”

“Better numerics and parameterization will improve the ocean’s predicted mean state and variability,” he says. “But the overall computational effort is rather big.”

“So our ability to simulate the ocean will improve, but gradually.”
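The horizon of predictability Danilov describes, and the reason ensembles help, can be seen in a toy example. The sketch below does not use an ocean model. It uses the Lorenz-63 equations, a classic three-variable chaotic system, as a stand-in, and the ensemble size, perturbation size, and step count are arbitrary choices for illustration.

```python
import numpy as np


def lorenz63(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivative of the Lorenz-63 system for one or many states."""
    x, y, z = state[..., 0], state[..., 1], state[..., 2]
    return np.stack([sigma * (y - x), x * (rho - z) - y, x * y - beta * z], axis=-1)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    k1 = lorenz63(state)
    k2 = lorenz63(state + 0.5 * dt * k1)
    k3 = lorenz63(state + 0.5 * dt * k2)
    k4 = lorenz63(state + dt * k3)
    return state + dt * (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0


def ensemble_spread(n_members=20, n_steps=3000, dt=0.01, perturbation=1e-6, seed=0):
    """Run members that differ only by tiny initial errors and record their spread."""
    rng = np.random.default_rng(seed)
    base = np.array([1.0, 1.0, 1.0])
    members = base + perturbation * rng.standard_normal((n_members, 3))
    spread = np.empty(n_steps)
    for i in range(n_steps):
        members = rk4_step(members, dt)
        # Standard deviation across members, averaged over the three variables.
        spread[i] = members.std(axis=0).mean()
    return spread


spread = ensemble_spread()
for step in (0, 500, 1000, 2000, 2999):
    print(f"step {step:5d}  ensemble spread {spread[step]:.3e}")
```

The members start almost identical, yet their spread grows until the runs are effectively unrelated. Past that point a single run says little, while the ensemble as a whole still describes the range of possible states.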

December 2025
