LARS: Not Just a Simple Handling Tool

, less capable ones. The smaller swarm members need to be able to navigate underwater but not necessarily localize globally underwater. They could just localize to where they last surfaced or descended. The recovering autonomous surface vessel needs to be a much more capable platform, perhaps with machine vision as well as GPS and the ability to detect acoustic signals. “You’re investing more in the autonomous systems on that vehicle, but you need significantly fewer of them,” he says. This lets the inexpensive AUVs benefit from the extra capability of the surface vessel, while still

Shipwreck Windfall: ROV Expedition Captures Maritime History

; said Pettus. “And the body and the skid that we were able to integrate onto the HD3 robot allowed us to have all of those sensors to create those 3D models.” Adding the Discovery Stereo Camera from Voyis sealed the deal, allowing for a 4K video stream and still images that were ready for machine vision and 3D reconstruction. [The Discovery Stereo camera from Voyis. Credit: Marley Parker] The vehicle is rated for 300 meters, ideal for shallow-to-mid-depth lake and coastal missions. It’s also compact, transportable in Pelican cases and deployable from small boats, making it perfect

Sea.AI Technology Supports European Initiative to Protect Whales

the European Union’s ATLANTIC WHALE DEAL project. Whales face increasing threats from ship collisions in busy maritime routes. The ATLANTIC WHALE DEAL brings together scientists, conservationists and technology experts from across Europe to tackle the problem. Sea.AI will provide its AI-powered machine vision technology, which detects and classifies objects on the water’s surface. This technology will enable scientists from IWDG and ULL to monitor and track surfacing whales, gather valuable data and develop real-time solutions to prevent ship strikes. Sea.AI’s machine vision system provides

DeepSea Launches SmartSight MV100 Machine Vision Camera

DeepSea Power & Light has launched the first in its line of cameras for machine vision applications: the SmartSight™ MV100. The SmartSight MV100 leverages machine learning and AI to bring object recognition, navigation, inspection technology, and other value-add autonomous solutions to the subsea market. The SmartSight MV100 camera includes a Gigabit Ethernet interface for easy integration with existing platforms and has low power consumption, making it well suited for autonomous vehicles. It weighs only 0.44 kg (flat) / 0.37 kg (dome) in water, allowing it to fit virtually anywhere. The MV100

Teledyne Unveils 16K, 1-Megahertz TDI Line Scan Camera

, or 16 Gigapixels per second data throughput. The Linea HS2 features a highly sensitive Backside Illuminated (BSI) multi-array charge-domain TDI CMOS sensor with 16k/5 µm resolution and optimized Quantum Efficiency, which Teledyne DALSA says meets the rigorous demands of current and future machine vision applications. The multi-array TDI sensor architecture allows the camera to be configured for superior image quality with maximized line rate, dynamic range, or full well, according to specific application requirements. This makes it particularly ideal for life science applications, says the company

Voyis Discovery Camera Integrated with Deep Trekker REVOLUTION ROV

generating high-resolution still images and IMU data. The resulting assets can be processed through edge computing to produce intricate 3D models. These capabilities find applications in intelligent ROV piloting and comprehensive inspections. The cameras produce clear stills suitable for advanced machine vision and 3D modeling, complemented by real-time image enhancement, an ultra-wide field of view (130°x130°), and distortion correction for comprehensive situational awareness. Designed with compactness in mind and incorporating integrated lights, along with DDS data architecture and ROS2 support

DeepOcean Deploys Its First Autonomous Drone for Inspection of Offshore Structures

MK2 ROV from Argus Remote Systems, with upgraded hardware and software packages. Argus is responsible for the AID platform and navigation algorithm. DeepOcean is responsible for the digital twin platform, mission planner software and live view of the AID in operation, while Vaarst is responsible for the machine vision camera “Subslam 2x” for autonomous navigation and data collection. The AID measures 1.25 x 0.85 x 0.77 meters, weighs 320 kilograms in air, and can operate in water depths down to 3,000 meters. The inertial navigation system selected from Sonardyne is the Sprint Navigator mini 4K

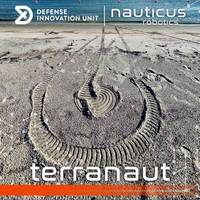

Nauticus Robotics' Robotic AI Mine-Detection Project Passes First Phase at Defense Innovation Unit

that its novel implementation included a robot capable of not only swimming, but also crawling out of the surf and onto the beach. "The solution utilizes the company’s autonomous command and control software platform, ToolKITT, and combines several mature technologies such as machine vision, autonomous mission planning, and acoustic data networking onto an amphibious robotic vehicle hull that can collect intelligence and identify potential hazards. ToolKITT, which also serves as the foundation of Nauticus’ flagship robot Aquanaut, was specifically designed to enable autonomous

Voyis Launches Advanced Vision System for Subsea ROVs

capability in underwater exploration and inspection applications. The Discovery Vision Systems represent a significant leap forward in the performance of ROV vision systems, delivering simultaneous 4K video and 3D data streams. We believe this system will become the standard platform for underwater machine vision and autonomy, helping to advance a revolution in subsea robotics."

August 2025
